I tend to use Keras for deep learning, with TensorFlow as the back-end. This lets me use TensorBoard, a web interface that charts loss and other metrics by training iteration and visualizes the computation graph. I noticed TensorBoard has an area of the interface for showing different runs, but I wasn’t able to see my different runs there. It turns out I was using it incorrectly: I wrote every run’s logs to the same directory, when you should give each run its own subdirectory. Here’s how I set it up:

First, I needed a unique name for each run. I already had a function for naming logs that captures the run’s start time when it is initialized, so I reused it.
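A minimal sketch of such a helper, assuming a timestamp-based name; `RunName` and the format string are hypothetical choices, not necessarily what the original function looks like:

```python
from datetime import datetime


class RunName:
    """Hypothetical helper: records the run's start time when it is constructed."""

    def __init__(self, start=None):
        # Capture the start time once, so every log from this run shares one name.
        self.start = start or datetime.now()

    def __str__(self):
        # Filesystem-safe timestamp, e.g. '2017-03-04_15-22-07'.
        return self.start.strftime("%Y-%m-%d_%H-%M-%S")


# Create it once at the start of the script and reuse the string everywhere.
RUN_NAME = str(RunName())
```

Second-level resolution is enough as long as you never start two runs within the same second; add microseconds (`%f`) to the format if you might.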

Then I used that name to build a constant for the TensorBoard log directory, with the unique run name as the last path component.
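A sketch of that constant, assuming a hypothetical `logs/tensorboard` parent directory; the inline timestamp stands in for the run-naming helper so the snippet runs on its own:

```python
import os
from datetime import datetime

# Assumption: all TensorBoard logs live under this parent directory.
TENSORBOARD_PARENT_DIR = os.path.join("logs", "tensorboard")

# One timestamped name per run (stand-in for the run-naming helper).
RUN_NAME = datetime.now().strftime("%Y-%m-%d_%H-%M-%S")

# Each run writes to its own subdirectory, which TensorBoard lists as a separate run.
TENSORBOARD_LOG_DIR = os.path.join(TENSORBOARD_PARENT_DIR, RUN_NAME)
```

In Keras you would then hand it to the TensorBoard callback, e.g. `model.fit(..., callbacks=[keras.callbacks.TensorBoard(log_dir=TENSORBOARD_LOG_DIR)])`.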

Finally, I point TensorBoard at the parent directory, leaving off the unique run name, so it picks up every run’s subdirectory.
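A sketch of that launch step in Python, assuming the same hypothetical `logs/tensorboard` parent directory; `tensorboard_command` and `launch_tensorboard` are illustrative names. It builds the same command you would type in a shell, `tensorboard --logdir logs/tensorboard --host 0.0.0.0 --port 6006`:

```python
import os
import subprocess

# Assumption: same parent directory as the log-dir constant, without the run name.
TENSORBOARD_PARENT_DIR = os.path.join("logs", "tensorboard")


def tensorboard_command(logdir=TENSORBOARD_PARENT_DIR, host="0.0.0.0", port=6006):
    """Build the command line that starts TensorBoard on the parent log directory."""
    return [
        "tensorboard",
        "--logdir", logdir,
        "--host", host,  # 0.0.0.0 means listen on all network interfaces
        "--port", str(port),  # 6006 is TensorBoard's default port
    ]


def launch_tensorboard():
    """Start TensorBoard; blocks until it is stopped (e.g. with Ctrl+C)."""
    subprocess.run(tensorboard_command(), check=True)
```

Calling `launch_tensorboard()` requires the `tensorboard` executable to be on your `PATH`.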

If you’re wondering why I explicitly set the host parameter to all hosts (`0.0.0.0`): it makes TensorBoard accept connections from other machines, which is what lets you reach it when running on a cloud GPU server.

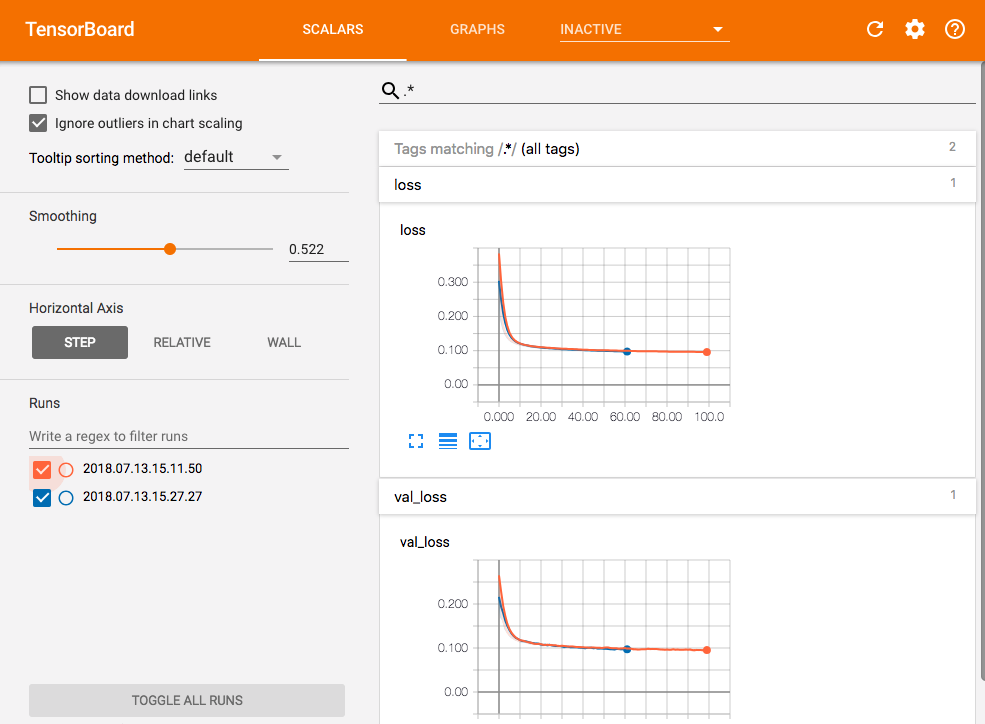

You’ll now see each subdirectory as a unique run in the interface:

That should do it. Comment if you have questions or feedback.